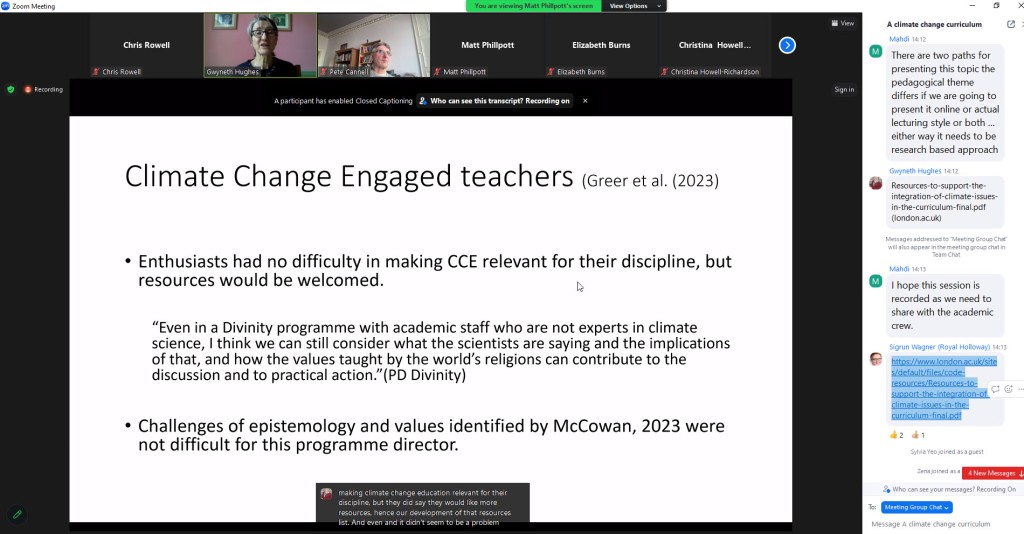

Today I tuned into a webinar given by Dr Gwyneth Hughes and Dr Peter Cannell, who responded to the growing interest in climate change education in Higher Education and explored how to address climate change in all disciplines. They also reported findings from a research project on the University of London’s distance and online programmes, based on a small survey and interviews with programme directors about how they teach climate change in a range of disciplines.

Links to freely available and open access climate change resources were also shared: https://www.open.edu/openlearn/education-development/supporting-climate-action-through-digital-education/content-section-overview?active-tab=content-tab

Here is an AI-generated (Claude) summary of the webinar:

The document is a transcript of a webinar on incorporating climate change into university curricula. The speakers discuss the importance of educating students on climate change, challenges with teaching the interdisciplinary topic, findings from a project examining how climate change is currently incorporated in University of London distance learning programs, and suggestions for improving climate change education.

Key points:

– Climate change is an urgent issue that will impact students’ lives, so universities have a responsibility to educate students on it. However, it crosses many disciplines, which makes it complex to teach.

– A University of London project surveyed distance learning program directors and found mixed opinions on whether climate change should be a separate module vs embedded across curricula. 73% promoted student discussion on climate change but often informally.

– There is limited insight into what distance students already know and want to learn regarding climate change.

– “Climate engaged” educators can connect climate change to their discipline but need time, resources and updated materials to systematically incorporate it into curricula and assessments.

– Transdisciplinary dialogue on climate change helps build understanding. A case study of a divinity program aiming to improve modules and run climate change events showed the value of this dialogue, but also the need for resources.

– Suggestions included: leveraging global partnerships for varied expertise and perspectives; focusing on specific geographies; starting climate change clubs to raise awareness then build out; having core sustainability modules.

– Key obstacles are lack of time, resources and support to update materials and teaching approaches for this evolving, controversial topic. Dedicated curriculum teams would enable more dynamic, discussion-based pedagogy.

In summary, climate change integration is complex but critical. Universities recognize this and more support is needed to systematically incorporate it across curricula, especially for distance learning.